Notes from a tiny data therapy workshop

I recently took a data therapy workshop taught by Rahul Bhargava at the MIT Media Lab. Our task was to identify a data set, find a story within the data set, and visualize the data in a way that facilitates the telling of the story.

Storyfinding

I was inspired by Jeff Walker’s kayak deployment of the Riffle-ito (an Open Water Project sensor with a GPS shield attached to it) back in early August and wanted to do something with the data collected from it. The Riffle-ito sensor measures temperature, time, and — with the GPS shield — location. Jeff was kind enough to send me the raw data (see his GitHub for more).

The basic story was this: Man deploys water sensor on kayak trip; travels to an outfall near the river bank; returns home; looks at the data; and finds little temperature variation.

Not exactly breaking news. However, in spite of the sensor data showing little change in temperature, I wanted to play with the concept of “mundane data” and think about how else it could be used.

Mapping the fourth dimension

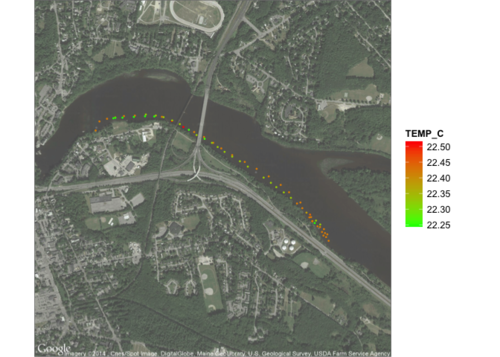

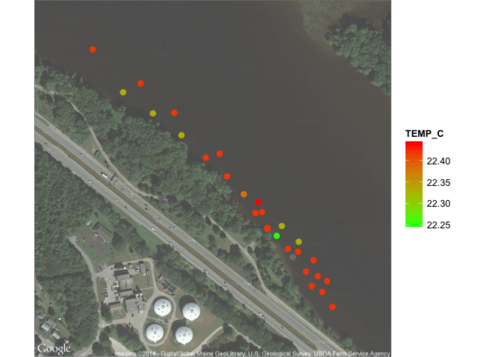

The images above show the original markup of Jeff’s data, made with the Google Maps library in R. You can see the GPS data and the temperature data plotted over time and space.

The dots represent individual data points from the sensor, and the colors correspond to the temperature readings at the time they were taken.
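For anyone curious how a temperature-to-color mapping like this can work, here is a minimal sketch in Python. It is not Jeff’s R code: the function name `temp_to_hex` and the blue-to-red color ramp are my own assumptions, chosen only to illustrate the idea of blending linearly between two colors.

```python
def temp_to_hex(temp, t_min, t_max):
    """Blend linearly from blue (coldest) to red (warmest).

    Hypothetical helper for illustration; the color ramp is an assumption,
    not the palette used in the original R maps.
    """
    if t_max == t_min:
        # No variation at all: use the midpoint color for every reading.
        frac = 0.5
    else:
        frac = (temp - t_min) / (t_max - t_min)
    # Clamp so out-of-range readings still produce a valid color.
    frac = min(1.0, max(0.0, frac))
    red = round(255 * frac)
    blue = 255 - red
    return f"#{red:02x}00{blue:02x}"
```

With a narrow temperature range like the one in this data set, setting `t_min` and `t_max` to the observed minimum and maximum stretches the small variation across the whole color ramp.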

I was fascinated by the spatial mapping of the data, but the R markups, though gorgeous, were relatively static. So I thought it might be fun to highlight the movement of Jeff’s kayak along the river over time by plotting the same data in CartoDB and animating it.

Further renderings

Then I wondered what the data might look like if broken down by the minute, so I made a simple bar graph in Tableau.
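The same per-minute breakdown can also be sketched outside Tableau. The Python below is an illustration under assumptions: I am assuming each bar stands for the mean of all readings in that minute, the function name `mean_temp_by_minute` is hypothetical, and the sample readings (including the date) are made up rather than taken from Jeff’s data.

```python
from collections import defaultdict
from datetime import datetime
from statistics import mean


def mean_temp_by_minute(readings):
    """Group (timestamp, temperature) pairs by minute and average each group."""
    buckets = defaultdict(list)
    for timestamp, temp in readings:
        # Drop seconds and microseconds so readings in the same minute share a key.
        minute = timestamp.replace(second=0, microsecond=0)
        buckets[minute].append(temp)
    # Sort by minute so the result reads left to right like the bar graph.
    return {minute: mean(temps) for minute, temps in sorted(buckets.items())}


# Made-up sample readings for illustration only.
sample = [
    (datetime(2014, 8, 1, 10, 0, 5), 22.0),
    (datetime(2014, 8, 1, 10, 0, 35), 22.5),
    (datetime(2014, 8, 1, 10, 1, 5), 23.0),
]
per_minute = mean_temp_by_minute(sample)
```

Each key in `per_minute` is the start of a minute, and each value is the height of the corresponding bar.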

While looking at the bar graph, I tried to think of other ways to represent the data. The bars reminded me of piano keys, which then prompted the thought:

What if you could “play” data like you play a musical score?

If you imagine the individual bars as “notes” and the height of each bar as a proxy for “pitch” or “tone,” you could almost map out a musical “scale” across the graph.
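Here is one rough way that bar-height-to-pitch idea could be written down in Python. Everything specific in it is an assumption of mine for the sake of the sketch: the major scale, the use of MIDI note numbers (60 is middle C), the linear mapping, and the function names `values_to_midi_notes` and `midi_to_hz`. It is not how the project in the next section assigns its notes.

```python
# Semitone offsets of a major scale, relative to the root note.
MAJOR_STEPS = [0, 2, 4, 5, 7, 9, 11]


def values_to_midi_notes(values, root=60, octaves=1):
    """Map each value linearly onto a major scale starting at MIDI note `root`.

    The smallest value lands on the lowest note of the scale and the
    largest value on the highest; everything else snaps to the nearest step.
    """
    if not values:
        return []
    scale = [root + 12 * octave + step
             for octave in range(octaves)
             for step in MAJOR_STEPS]
    lo, hi = min(values), max(values)
    if hi == lo:
        # Perfectly flat data: every bar plays the same note.
        return [scale[0]] * len(values)
    notes = []
    for value in values:
        position = (value - lo) / (hi - lo)          # 0.0 to 1.0
        index = round(position * (len(scale) - 1))   # nearest scale step
        notes.append(scale[index])
    return notes


def midi_to_hz(note):
    """Convert a MIDI note number to a frequency, with A4 (note 69) at 440 Hz."""
    return 440.0 * 2 ** ((note - 69) / 12)
```

Because the mapping is relative to the minimum and maximum of the data, even a nearly flat temperature series gets spread across the full scale, which is one way of making “mundane” data audible.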

Data auralization

I found an interactive music project out of NYU’s MusEDlab and the Chamber Music Society of Detroit that lets the viewer play along on the computer keyboard while watching and listening to a person play the piano. The computer keys are programmed to play within the same scale as the musician, so the viewer ends up augmenting the recorded performance with their own interpretive one.

Taking a MakeyMakey, which is an Arduino-based board that lets you create on/off buttons out of pretty much anything that conducts electricity (e.g. pencil lead-based drawings, bananas, cups filled with water), I mapped my computer keyboard to the board, then the board to some apples. Instead of pressing my computer keys, I could then use the apples as an interface to the piano sounds. (This is the SparkFun tutorial I followed.)

“Playing” data as music using the MakeyMakey and NYU’s Virtual Keyboard

Why I’m interested

Data legibility

Most of the time, representations of data are ocularcentric, that is, very focused on image and spectacle. I’m interested in ways to represent and experience data through other senses. Check out the Coqui water sensor for a great example of how water conductivity (the reciprocal of water resistivity) can be output as audio.

Data as interpretive

The ability to “play” the data as music leaves the interpretation up to the person playing it. For example, one could start at any note within the scale. There is no right or wrong way to do it. This foregrounds the fact that data itself is something that is interpreted, not fixed or purely objective.

Data as interactive

This was a way to demonstrate how data can be interactive. Not only that, we can experience data through different interfaces. The MakeyMakey enables us to [re]connect immaterial data sets to the actual material world.

Data as maybe-not-that-interesting

Let’s be honest. Data aren’t always interesting. Often, when we talk about things like “data-driven storytelling,” we emphasize results over process or anything else. This project was a way to counter that bias toward “conclusive” data, and something of an ode to the mundane data that permeates our everyday lives.

Questions I have

As I explore the range of projects that span sensor journalism, I am thinking about what “journalism” itself means and entails. At the moment, my concept of journalism is deliberately liberal, because the field is constantly being recast with the advent of new technologies. For the purposes of looking at sensor journalism, I define “journalism” as a process of documenting the world.

- What forms of documentation qualify or disqualify as sensor journalism?

- What should the standards or expectations be for documenting the process of gathering and representing the data in various forms?

- Tools for visualizing/representing historical data are increasingly accessible online; what kinds of tools might be helpful to develop for representing sensor-based data (historical and realtime)?

Links and references:

The final slides from my presentation are here.

The Public Lab research note version is here.

The data therapy workshop was taught by Rahul Bhargava at the MIT Media Lab.